When distributing live video online, there’s a lot to consider. Ensuring a high-quality experience for viewers across the globe is no easy feat. What’s more, many content distributors struggle to minimize the delay between when content is captured and when it plays back on end-user devices. Called video latency, this is a critical factor for interactive use cases.

In this article, we detail what video latency is possible in 2024, share tips for reducing streaming lag, and explore strategies for businesses to achieve real-time streaming while maintaining a superior quality of service and audience experience.

To give you a bit of context, this article covers the workflow and use case of live streaming from one source to many participants, for any audience size anywhere in the world. Video communication applications like Skype, Teams, and Zoom usually do not have latency issues, since everything needs to happen in real time anyway. However, these video meeting tools are limited in scale and group size, and usually do not work well in web browsers.


What Is Video Latency?

Video latency is the lag between when a video is captured and when it’s displayed on a viewer’s screen. This delay can be measured in seconds. Although there’s no streaming industry standard for latency terminology, at nanocosmos we break it up into the following four categories:

- High latency: In many streaming workflows, the latency is somewhere north of 30 seconds. This is because standard HTTP-based protocols like HLS default to 6-second segment lengths, and three segments are required before a video will play back. This delay is only acceptable for linear programming, and even then, it’s not ideal.

- Typical latency: Typical latency sits between 6 and 30 seconds. Many live news and sports broadcasters are comfortable with this delay. But as second screen experiences, watch parties, and interactive overlays become more common, the industry is looking to close this gap.

- Low latency: When a stream is tuned for low latency, it reaches viewers 1 to 6 seconds after the video is captured. Many social media sites fall in this range, which is why there’s often a disconnect between when a comment shows up on the screen and when it’s acknowledged by the person streaming.

- Ultra-low latency or real-time: Sub-second streaming falls in the category of ultra-low latency, and it’s ideal for all real-time entertainment. Interactive content like gaming, virtual events, online fitness, and more should always aim for this target. As such, you’ll see us use ‘real-time,’ ‘ultra-low latency,’ and ‘sub-second’ to refer to the same thing throughout this article.
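To make the four categories concrete, here is a minimal sketch that maps a measured glass-to-glass delay to a category name. The function name and the handling of the exact boundary values (1, 6, and 30 seconds) are assumptions made for illustration; the ranges themselves come from the list above.

```python
def classify_latency(seconds: float) -> str:
    """Map a delay in seconds to one of the four latency categories."""
    if seconds < 0:
        raise ValueError("latency cannot be negative")
    if seconds < 1:
        return "ultra-low"   # sub-second, also called real-time
    if seconds <= 6:
        return "low"         # 1 to 6 seconds
    if seconds <= 30:
        return "typical"     # 6 to 30 seconds
    return "high"            # north of 30 seconds


if __name__ == "__main__":
    for delay in (0.4, 3, 12, 45):
        print(delay, "->", classify_latency(delay))
```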

You may be surprised to read about “high” and “typical” latency of several seconds. This is caused by the nature of video streaming. When Apple introduced the HLS protocol some years ago, streaming became simpler than before, but the protocol introduced significant latency into the video stream. This did not matter back then, when live video streaming was less prominent and mostly used for TV-like “broadcast” applications.
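The arithmetic behind segment-based delay is simple enough to sketch. Using the figures mentioned earlier (6-second segments, three segments loaded before playback), the player alone holds back 18 seconds of video before any encoding or network delay is added. The function name and the 2-second comparison value are illustrative assumptions, not settings taken from any particular player.

```python
def min_startup_buffer(segment_seconds: float, segments_before_play: int) -> float:
    """Seconds of video a segment-based player holds before it starts playing."""
    if segment_seconds <= 0 or segments_before_play < 1:
        raise ValueError("segment length and segment count must be positive")
    return segment_seconds * segments_before_play


if __name__ == "__main__":
    # Figures from the text: 6-second segments, three segments required.
    print(min_startup_buffer(6.0, 3))  # 18.0
    # Hypothetical shorter segments, to show how segment size drives delay.
    print(min_startup_buffer(2.0, 3))  # 6.0
```

Shorter segments shrink this buffer, but segment-based delivery still starts from a multiple of the segment length.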

When Does Low Latency Matter?

So why is video latency problematic, anyway? The most popular example is that of hearing your neighbor celebrate a goal while the live sports broadcast that you’re watching lags behind. But there are much more dire reasons to reduce latency.

For video experiences that require two-way participation, the effects of video latency can be disastrous. Imagine attempting to place a bid during a live auction, only to find out 30 seconds later that the item has been sold for a much lower price than you were willing to offer. In this case, the delay would result in a terrible experience for viewers and lost revenue for the auction house.

As mentioned, not all live streaming applications need low latency. In broadcast applications like OTT (Over-The-Top) without interaction, latency isn’t a critical factor. The primary concern might be synchronizing “audience cheers” with other broadcast channels such as cable TV or radio, placing it in the “medium-low” latency range of approximately 6-10 seconds.

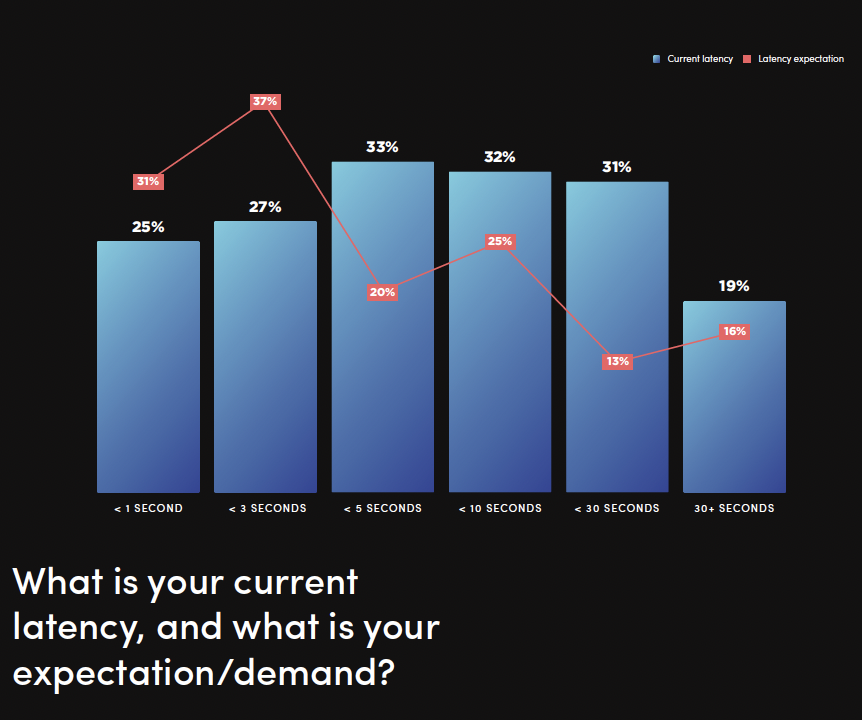

As the graph from Bitmovin’s 2022/2023 Video Developer Report shows, delays of more than five seconds are the norm in such broadcasting environments. However, in what we refer to as “lean forward scenarios,” maintaining a latency under one second is imperative. Without this responsiveness, monetization and interactive engagement with the content become unfeasible.

Who Needs Low-Latency Streaming?

Almost any interactive use case where viewers are able to influence the content through participation requires real-time streaming. Minimizing the delay is key to maximizing engagement.

Here’s a closer look at some of the video-based experiences where ultra-low latency is a must:

- Online gambling and igaming. In today’s online gambling environments, players make split-second decisions while watching live events. Online casinos, sportsbooks, and other igaming platforms need ultra-low latency streaming to ensure their participants’ bets are placed on time. And with new use cases like microbetting gaining momentum, the requirement for speedy video delivery continues to grow.

- Live commerce and real-time auctions. When it comes to live shopping, timing is everything. Buyers are motivated by the urgency that real-time streaming delivers. What’s more, the ability to chat during a makeup demo, place a bid during an auction, or purchase the last remaining item before it’s sold all requires sub-second latency.

- Town halls and live events. Anyone who’s participated in a Zoom meeting knows that video delay can be awkward and frustrating. As more events go virtual, enterprises are looking to power real-time collaboration across distances. Replicating the in-person experience starts with reducing latency. It also ensures that meetings are productive and events are engaging.

What Causes Latency When Live Streaming?

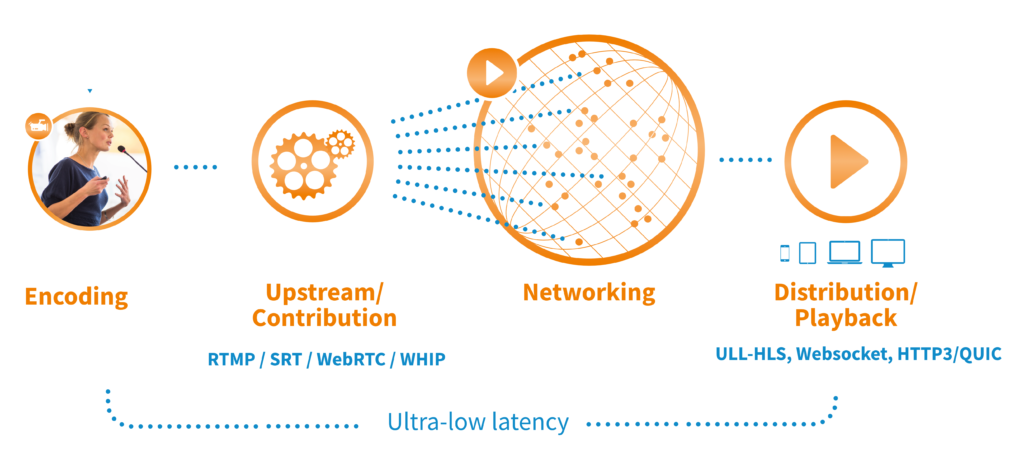

Online video delivery is complex. The glass-to-glass journey includes a ton of steps — including ingest, transcoding, delivery, and player buffering.

Not only is it time- and resource-intensive to build out an end-to-end streaming workflow — it also requires a certain level of expertise. That’s why many organizations choose to partner with a company like nanocosmos that specializes in real-time streaming infrastructure.

Here are the spots where latency can build up across a streaming workflow:

- Encoding, transcoding, and packaging: From your on-site encoder to the transcoding service that you use to further process your content, many aspects of the encoding pipeline can inject latency. High bitrates, different codecs, and first-mile upload all contribute to lengthy delays.

- Delivery format and segment size: As mentioned above, standard protocols like HLS and DASH weren’t designed with speed in mind. The actual size of each segment (or chunk) is often a primary driver of lag. What’s more, it’s impossible to hit the sub-second target without an ultra-low latency format like H5Live or WebRTC.

- Delivery to end-users: Engaging online experiences shouldn’t be limited by geographic boundaries. But the farther your viewers are from the edge servers, the longer it’ll take for the video to play. You’ll want to look for a CDN that addresses your specific needs for lightning-fast delivery.

- Playback: Many specifications require a minimum number of segments to be loaded before a stream will play. This results in a buffer between when a user clicks play and when the live content actually appears. Finding a player with fast startup time will address this final hang up — giving your audience the instant gratification they demand.
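One way to reason about these four spots is as a latency budget: add up the delay of each stage and see which one dominates. The sketch below does exactly that. All stage values are hypothetical placeholders chosen for illustration, not measurements of any real workflow, and the class and function names are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    seconds: float  # delay contributed by this stage


def glass_to_glass(stages: list[Stage]) -> float:
    """Total delay from capture to display, as the sum of all stages."""
    return sum(stage.seconds for stage in stages)


def largest_contributor(stages: list[Stage]) -> Stage:
    """The stage that adds the most delay, i.e. the first place to optimize."""
    return max(stages, key=lambda stage: stage.seconds)


# Hypothetical numbers for a segment-based workflow (illustration only).
PIPELINE = [
    Stage("encoding, transcoding, and packaging", 0.5),
    Stage("delivery format and segment size", 18.0),
    Stage("delivery to end users", 0.25),
    Stage("playback", 1.0),
]

if __name__ == "__main__":
    print("total seconds:", glass_to_glass(PIPELINE))
    print("largest contributor:", largest_contributor(PIPELINE).name)
```

With placeholder values like these, segment buffering dwarfs every other stage, which is why the delivery format is usually the first thing to change.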

How Can Latency Be Reduced?

Latency can creep in at every stage of the workflow, so tackling it requires a comprehensive approach. Optimizing all the stages requires expertise and takes attention away from your main business goals.

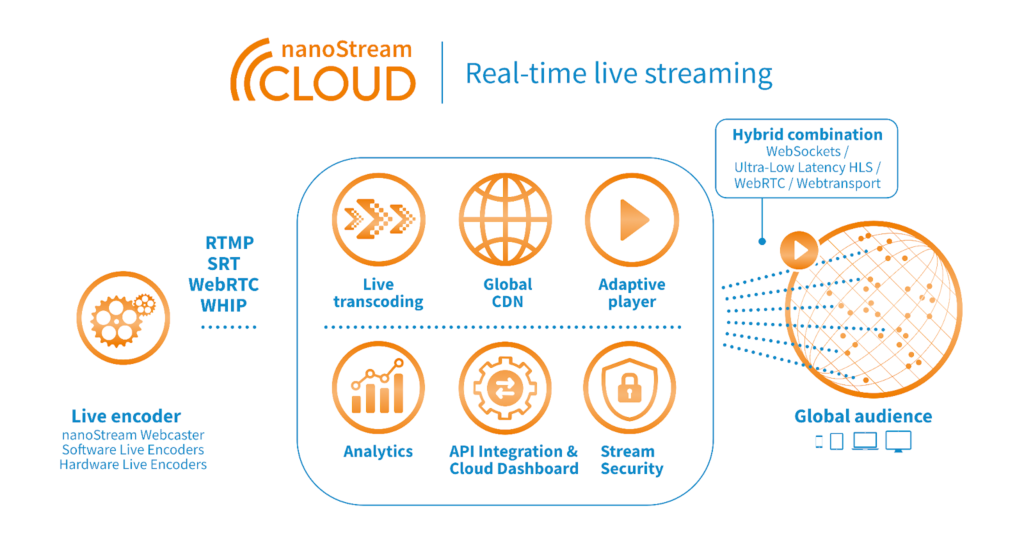

On the other hand, solutions like nanoStream Cloud handle the technology for you and connect your team with 25+ years of audio and video streaming expertise.

Here’s a closer look at where video delays build up. As you’ll see, the easiest way to ensure high quality real-time delivery is by controlling the entire streaming pipeline with an end-to-end platform.

1. Efficient Encoding

Whether you’re using an open-source encoder like OBS Studio, a hardware encoder like Osprey Talon, or a browser-based solution like nanoStream Webcaster, keeping latency low starts here. You’ll want to select an encoder that offers support for the low-latency contribution formats listed below. It’s also imperative that your encoder can be configured accordingly and integrated with a live streaming platform for large-scale delivery.

2. Low-Latency Protocols

Creating top-notch streaming experiences goes beyond the protocols you select. Many protocols are available across different platforms and networks, so deciding which one to use can be confusing. A platform solution like nanoStream Cloud takes that burden off your hands by using automatic detection to decide which protocol is best.

Here’s a breakdown of the lowest-latency streaming protocols in use:

- SRT: As an ingest format, SRT is often used to transport live content to a streaming platform like nanoStream Cloud. From there, the video is repackaged into a format that’s suitable for playback. SRT ensures quick delivery over suboptimal networks, making it an ideal format for use cases like live auctions, news, and more.

- WebRTC: WebRTC is an ultra-low-latency protocol that works for both ingest and delivery. The peer-to-peer technology was designed for chat-based collaboration and supports browser-based encoding. This makes it great for town hall meetings and other scenarios where you’d want to go live from your laptop. That said, scaling video delivery to thousands of viewers requires additional infrastructure (which we at nanocosmos provide).

- Low-Latency HLS: Apple’s newest spec seeks to overcome the lengthy delay that’s always plagued HLS. The protocol sits at 2-5 seconds, which means it’s still on the higher end for what some use cases require.

- Media Over QUIC: As an emerging technology that we recently showcased at IBC 2023, Media Over QUIC is designed to provide simple, low-latency media distribution and ingestion. Its advantages include a single handshake to establish the connection, roaming support as users switch between Wi-Fi and cellular, congestion control, and more.

- H5Live: The only technology that can compete with WebRTC in terms of delivery speed is our very own H5Live. Based on WebSockets, Low-Latency HLS, and fragmented MP4, H5Live ensures sub-second streaming at scale. Rather than being a proprietary technology, it intelligently matches the end user’s device with one of these formats and can be easily embedded in any browser.
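The general idea of matching a device to a delivery format can be sketched as a preference list with fallback. This is not the H5Live implementation: the format labels, the preference order, and the function name below are all hypothetical, and a real player would detect capabilities in the browser rather than receive them as a set.

```python
from typing import Iterable, Optional

# Hypothetical labels and ordering, lowest expected latency first (assumption).
DEFAULT_PREFERENCE = ("websocket-fmp4", "low-latency-hls")


def pick_delivery_format(
    supported: Iterable[str],
    preference: tuple[str, ...] = DEFAULT_PREFERENCE,
) -> Optional[str]:
    """Return the first preferred format the device supports, or None."""
    available = set(supported)
    for fmt in preference:
        if fmt in available:
            return fmt
    return None


if __name__ == "__main__":
    print(pick_delivery_format({"websocket-fmp4", "low-latency-hls"}))
    print(pick_delivery_format({"low-latency-hls"}))
    print(pick_delivery_format({"something-else"}))
```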

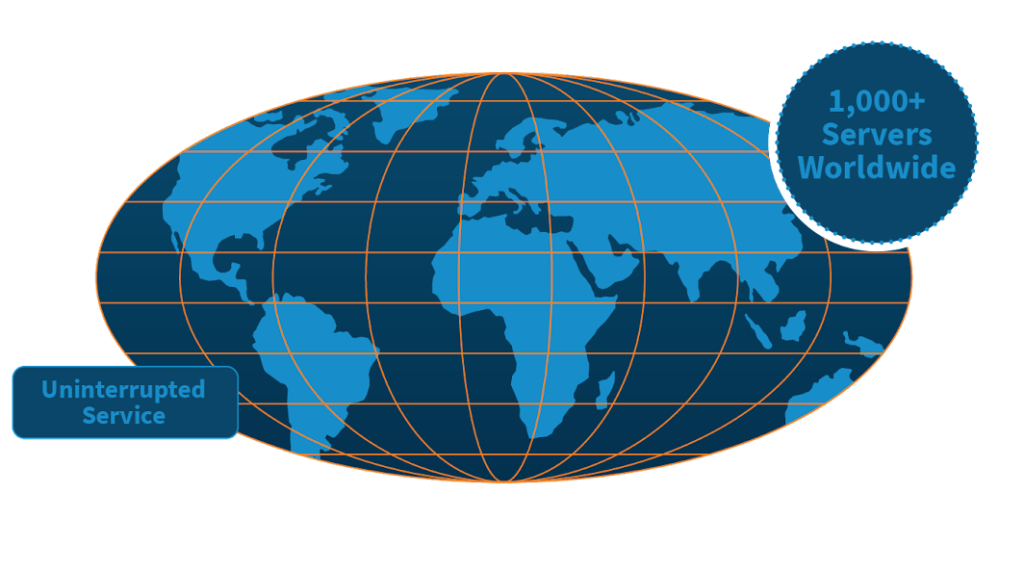

3. Ultra-Low-Latency Content Delivery Network (CDN)

The content delivery network (CDN) is the core of a live streaming platform. It is the workhorse of online video — ensuring that content reaches every corner of the world without buffering or interruptions (regardless of audience size). But guaranteeing a smooth viewing experience for interactive video workflows takes more than a traditional CDN.

When you use a solution like nanoStream Cloud that combines an ultra-low latency CDN with a powerful video infrastructure, quick video distribution is the name of the game. With more than 1,100 servers globally, our streaming infrastructure ensures sub-second streaming at a global scale. We designed it to reach any audience without downtime using dynamic auto-scaling and automatic failover.

4. Live Metadata Support

Keeping the conversation going in interactive video environments requires maintaining a continuous feedback loop with your audience. Interactive overlays like chat functionality, polling, and betting are only possible with timed metadata.

You’ll want to look for a video platform that offers live metadata support. This ensures the interactive elements your users demand can be embedded into live content.
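To illustrate what “timed” means here, the sketch below queues metadata events by media timestamp and releases each one only when playback reaches that point, so an overlay appears together with the frame it belongs to. The class, its methods, and the payload shape are hypothetical and do not represent any specific platform’s metadata API.

```python
import heapq
from typing import Any


class TimedMetadataQueue:
    """Holds metadata events until the playback position reaches them."""

    def __init__(self) -> None:
        self._heap: list[tuple[float, int, Any]] = []
        self._counter = 0  # tie-breaker so payloads are never compared

    def push(self, media_time: float, payload: Any) -> None:
        """Schedule a payload for a given media timestamp (in seconds)."""
        heapq.heappush(self._heap, (media_time, self._counter, payload))
        self._counter += 1

    def due(self, playback_position: float) -> list[Any]:
        """Pop and return all payloads scheduled at or before the position."""
        ready = []
        while self._heap and self._heap[0][0] <= playback_position:
            _, _, payload = heapq.heappop(self._heap)
            ready.append(payload)
        return ready


if __name__ == "__main__":
    queue = TimedMetadataQueue()
    queue.push(2.0, {"type": "poll", "question": "Who wins?"})
    queue.push(1.0, {"type": "chat", "text": "Hello!"})
    print(queue.due(1.5))  # only the chat message is due
    print(queue.due(2.5))  # now the poll is due
```

The lower the stream latency, the closer these media timestamps sit to real time, which is what keeps chat, polls, and bets meaningful.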

5. Adaptive HTML5 Player

All of the considerations detailed above will amount to very little without an ultra-low-latency player like nanoStream H5Live Player, a key part of the nanoStream Cloud real-time video platform. Adaptive bitrate delivery, fast video startup time, and support for low-latency protocols are all key features to look for (and ones that our player delivers).
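Adaptive bitrate delivery boils down to picking the best rendition the viewer’s connection can sustain. Here is a minimal sketch of one common heuristic: choose the highest bitrate that fits within the measured throughput, reduced by a safety margin. The bitrate ladder, the 0.8 safety factor, and the function name are illustrative assumptions and do not describe how any specific player makes this decision.

```python
def pick_rendition(
    ladder_kbps: list[int],
    throughput_kbps: float,
    safety: float = 0.8,
) -> int:
    """Pick the highest bitrate that fits in the throughput budget.

    Falls back to the lowest rendition when nothing fits, so playback
    can continue at reduced quality instead of stalling.
    """
    if not ladder_kbps:
        raise ValueError("bitrate ladder must not be empty")
    budget = throughput_kbps * safety
    fitting = [bitrate for bitrate in ladder_kbps if bitrate <= budget]
    return max(fitting) if fitting else min(ladder_kbps)


if __name__ == "__main__":
    ladder = [400, 1200, 2500, 5000]  # hypothetical renditions in kbps
    print(pick_rendition(ladder, throughput_kbps=3500))
    print(pick_rendition(ladder, throughput_kbps=300))
```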

6. End-to-End Video Platform

Getting low-latency video right is hard. All of the components detailed above need to work together efficiently. And unless you’re building the next Netflix from the ground up, this is only possible with a complete solution that supports large-scale delivery across the globe.

Only an end-to-end platform built for ultra-low latency can ensure a consistently high quality of experience. Integrating analytics, CDN, and player functionalities into a unified solution ensures the consistent and reliable delivery of your streams across all platforms. With this approach, operators can monitor metrics and make adjustments as needed, so you can focus on your business while still maintaining full control over the video experience.

Other Considerations When Building Interactive Video Experiences

Keeping your audience captivated goes beyond quick delivery times. It’s about crafting a top-notch experience and service that is high-quality, scalable and user-friendly. This way, businesses can focus on what they do best. There are also other important features to consider when developing an interactive video application to fulfill this goal, as outlined below.

- Analytics: Who cares if your content reaches viewers quickly if their experience is terrible? Insight into the user experience is critical, which is why you’ll want an analytics solution that provides insight into the complete stream performance.

- Security: Securing content is often vital for monetization. Beyond just protecting your streams, it also protects your users and your reputation. Finding a platform with robust security measures also improves reliability, so this isn’t a place to skimp.

- Ease of integration: Unless you’re building all your infrastructure in house, you’ll want to select a platform that plays nicely with others. Finding a video API that can be integrated with your favorite tools and existing workflows will keep your life simple.

How to Get Started With nanoStream Cloud

We designed nanoStream Cloud to power the engaging video experiences that demand real-time delivery. It combines all the requirements detailed in this article into one comprehensive solution, so you are in complete control of your video experience.

If you’re ready to build unparalleled experiences that drive business results, there’s no time to waste. Get started with a free trial today.
